Data collection within iTalk2Learn has been completed, yielding more than 80 hours of transcribed audio corpora for English (37h) and German (44h). These data sets were divided into separate training and evaluation sets. The two sets need to be disjoint so that performance can be evaluated fairly, and they were assembled in a continuous way, drawing data from across the project’s duration rather than from single sessions.

In previous blog-posts we introduced the measures of word-error-rate (WER) – a commonly applied measure for the performance of ASR systems – and precision/recall (PR) – a standard measure for evaluation of performance in Information Retrieval (IR).
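As a brief reminder of how WER behaves, here is a minimal Python sketch. It assumes the common definition of WER as the word-level edit distance (substitutions, deletions and insertions) divided by the number of words in the reference transcript. The function name `word_error_rate` and the example sentences are purely illustrative and are not taken from the iTalk2Learn tooling.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the reference length (assumed definition)."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] = edit distance between the reference words seen so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


# Hypothetical example sentences, each containing a single substitution.
reference = "write three in the numerator"
wer_content_error = word_error_rate(reference, "write three in the terminator")
wer_function_error = word_error_rate(reference, "write three an the numerator")
print(wer_content_error, wer_function_error)
```

Both hypotheses contain exactly one substituted word out of five, so they receive the same WER regardless of which word was affected.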

WER is an adequate measure when looking at complete transcripts, or when tracking progress in the development of speech recognition systems and models. However, WER is only one side of the coin and may paint a misleading picture: all words are weighted equally when calculating this rate, so misrecognising the word "numerator" as "terminator" counts exactly as much as misrecognising the word "in" as "an". Clearly, the impact of these two errors would be quite different for a system, such as the iTalk2Learn platform, that is supposed to react appropriately to the recognised words. This is why precision and recall were selected early on as the primary evaluation metrics for ASR in iTalk2Learn. To apply these metrics, certain parameters had to be determined, such as:

- what should be the unit of evaluation?

- what are the key-terms whose presence/absence should be evaluated?

The unit of evaluation was determined to be on a per-session level, in order to reflect the overall architecture of the iTalk2Learn platform. Terminology from the math-domain as well as words (and non-words) reflecting cognitive load were selected as the elements for the PR-evaluations. Two further sets of words – one consisting of all words appearing in a session and the other one consisting of all content-words appearing in a session – were likewise selected for PR-evaluation to serve as a kind of intermediate evaluation point between WER and PR of key-terms.
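The per-session evaluation described above can be sketched as follows. This is a simplified illustration rather than the project's actual evaluation code. It assumes that precision and recall are computed on the presence or absence of terms within one session (that is, on sets of words), and that an empty set yields a score of 1.0. The names `precision_recall` and `MATH_TERMS`, as well as the tiny term list and example sentences, are hypothetical.

```python
def precision_recall(reference_words, recognised_words, key_terms=None):
    """Set-based precision/recall for one session.

    If key_terms is given, only those terms are evaluated;
    otherwise every word in the session counts.
    """
    ref = set(reference_words)
    hyp = set(recognised_words)
    if key_terms is not None:
        ref &= set(key_terms)
        hyp &= set(key_terms)
    hits = len(ref & hyp)
    # Assumption: with nothing to find (or nothing found), the score is 1.0.
    precision = hits / len(hyp) if hyp else 1.0
    recall = hits / len(ref) if ref else 1.0
    return precision, recall


# Hypothetical key-term list and session transcripts, for illustration only.
MATH_TERMS = {"numerator", "denominator", "fraction"}
reference = "put three in the numerator of the fraction".split()
hyp_math_error = "put three in the terminator of the fraction".split()
hyp_function_error = "put three an the numerator of the fraction".split()

math_pr_a = precision_recall(reference, hyp_math_error, MATH_TERMS)
math_pr_b = precision_recall(reference, hyp_function_error, MATH_TERMS)
print(math_pr_a, math_pr_b)
```

In this sketch, losing "numerator" halves the recall on the math set, while the "in"/"an" confusion leaves the math scores untouched, although both hypotheses contain exactly one misrecognised word. Calling the function without `key_terms` corresponds to the "all" set.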

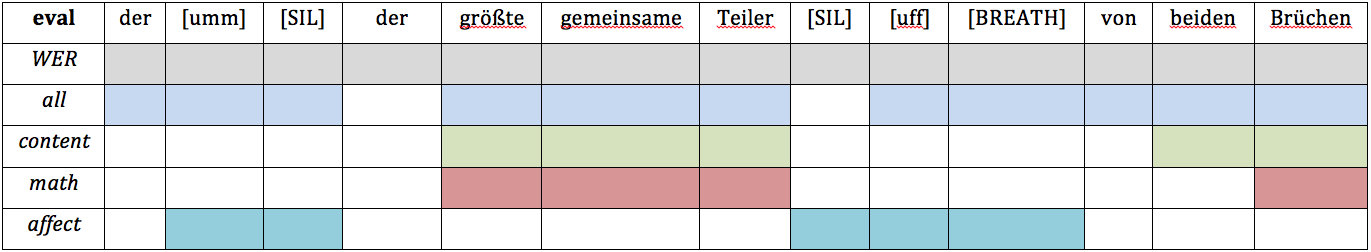

In the following example, demonstrating the difference between the various measures, these sets will be referred to as: all, content, math and affect. In the German utterance „der [umm] [SIL] der größte gemeinsame Teiler [SIL] [uff] [BREATH] von beiden Brüchen” (the [umm] [silence] the greatest common divisor [silence] [pf] [breathing] of both fractions), the following entries will be taken into account when measuring performance:

Table 1. Elements involved in evaluation

The evaluations performed are offline, in the sense that systems are evaluated against a pre-defined test set. They will yield insights into the relationship between WER and PR performance. However, their real significance will only become tangible once these results are compared with those of the summative evaluations carried out using the live system.

References:

[1] https://commons.wikimedia.org/wiki/File:Plastic_tape_measure.jpg